UNISURF:

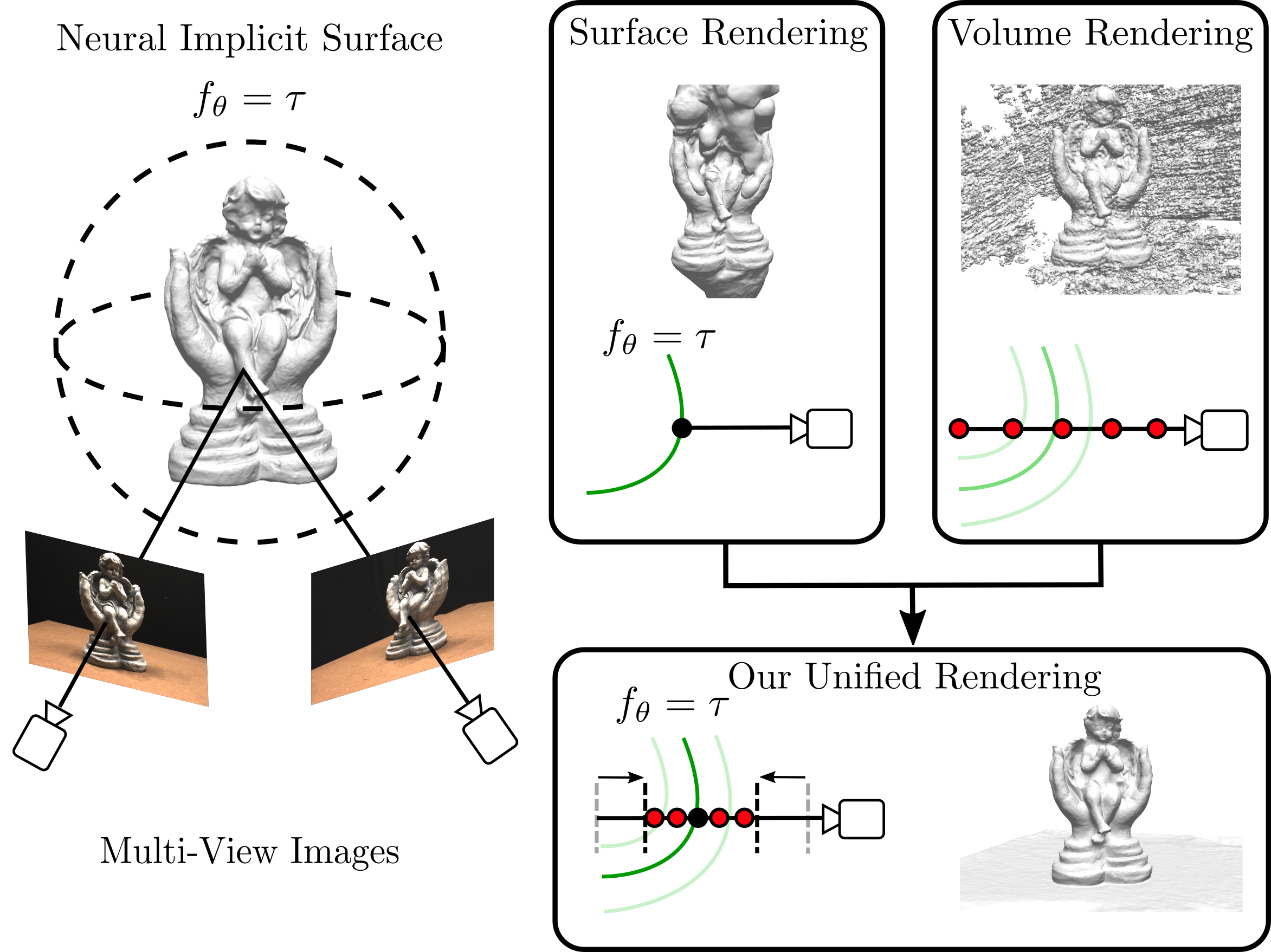

Unifying Neural Implicit Surfaces and Radiance Fields for Multi-View Reconstruction

Abstract

Neural implicit 3D representations have emerged as a powerful paradigm for reconstructing surfaces from multi-view images and synthesizing novel views. Unfortunately, existing methods such as DVR or IDR require accurate per-pixel object masks as supervision. At the same time, neural radiance fields have revolutionized novel view synthesis. However, NeRF’s estimated volume density does not admit accurate surface reconstruction. Our key insight is that implicit surface models and radiance fields can be formulated in a unified way, enabling both surface and volume rendering using the same model. This unified perspective enables novel, more efficient sampling procedures and the ability to reconstruct accurate surfaces without input masks. We compare our method on the DTU, BlendedMVS, and a synthetic indoor dataset. Our experiments demonstrate that we outperform NeRF in terms of reconstruction quality while performing on par with IDR without requiring masks.

TL;DR: Unification of Occupancy Networks and Neural Radiance Fields for 3D reconstruction.
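To make the unification concrete, here is a minimal sketch of how a single pixel color could be composited along a ray when each sample carries an occupancy value in [0, 1] instead of a volume density. It assumes the standard alpha-compositing form, where a sample's weight is its occupancy times the probability that all earlier samples were free space. The function names `occupancy_weights` and `render_ray` are hypothetical and are not taken from the authors' code; the occupancies and colors would normally come from trained networks, which are omitted here.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def occupancy_weights(occupancies: Sequence[float]) -> List[float]:
    """Compositing weight per sample: o_i * prod_{j<i} (1 - o_j).

    Samples are assumed to be ordered from the camera outward.
    """
    weights = []
    free_space = 1.0  # probability that the ray has not hit anything yet
    for o in occupancies:
        weights.append(o * free_space)
        free_space *= 1.0 - o
    return weights


def render_ray(occupancies: Sequence[float], colors: Sequence[Color]) -> Color:
    """Blend per-sample RGB colors with the occupancy-based weights."""
    weights = occupancy_weights(occupancies)
    return tuple(
        sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3)
    )


# Hard (binary) occupancy: only the first occupied sample contributes,
# which corresponds to surface rendering.
hard = render_ray([0.0, 1.0, 0.5], [(1, 0, 0), (0, 1, 0), (0, 0, 1)])

# Soft occupancy: several samples contribute, which corresponds to
# volume rendering.
soft = occupancy_weights([0.5, 0.5])
```

With binary occupancies the weights collapse onto the first occupied sample, so the same formula behaves like surface rendering; with soft occupancies it blends several samples, as in volume rendering.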

Results for the DTU MVS dataset

Video

Citation

Bibtex:

@inproceedings{Oechsle2021ICCV,
  title = {UNISURF: Unifying Neural Implicit Surfaces and Radiance Fields for Multi-View Reconstruction},
  author = {Oechsle, Michael and Peng, Songyou and Geiger, Andreas},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year = {2021},
  doi = {}
}